Semi-random notes on programming, adoption, and life in general

Thursday, December 14, 2006

TiVo now placing ads on the delete screen

If TiVo can do stuff like that to improve their bottom line, I'm all in favor of it. They are saying: "Here's some advertising. If you want to see it, click on it." I still have the choice of viewing the ad or not. If it keeps TiVo from going under or having to raise the subscription fees, then it's a great thing. I do wonder how the advertising is selected. Is it targeted by the show that was recorded, or is it more random?

One thing TiVo could do is display subtitles when you fast forward through a commercial. That way, if you were skipping over a commercial, you could still get its message. You might see something that makes you stop and view that commercial. The technology is already in place: subtitles are already encoded in the TV signal.

Thursday, November 09, 2006

It looks like Genesis is going back out on the road. Phil, Mike, and Tony will be doing 20 shows in Europe and 20 in the US for the "Turn It On Again" tour of 2007. Originally, the five members from the classic '70s lineup met to see if they could schedule a set of shows of "The Lamb Lies Down on Broadway", but Peter Gabriel declined due to scheduling conflicts. With Peter out, it didn't make sense to include Steve Hackett as the four member version of Genesis was only a tiny slice out of their history. You can view their news conference where they discuss all of this from this shiny link.

With just 20 shows in the US, I don't have my hopes up for a local appearance.

Wednesday, November 08, 2006

Sometimes there is a free lunch

I had a nice little surprise in my email yesterday. It was a gift certificate from VSoft Technologies for one free license for Automise. Evidently I received it because I own a license for FinalBuilder, a fantastic tool for automating product builds.

With FinalBuilder, I can create a new build of one of our applications from source control, through compiling, to building the installers, to sending out email to all interested parties that a new build is out.

Automise is basically FinalBuilder, but without the compiler specific tools. If you have a batch process that uses a motley collection of batch files and command line tools, then Automise will make your life simpler.

Tuesday, October 31, 2006

Why doesn't {$REGION} work above the interface?

Our Delphi code has some standard header comments at the top of the unit. They have some information about the unit, who created it, and the purpose of that unit. They also have some tags that the version control system will expand. One of those tags is the history of who edited that file. On some of our older files, that list can get quite long.

So I figured since we moved to Delphi 2006, I could wrap those comments inside a {$REGION}/{$ENDREGION} block and collapse that block. I added {$REGION} and {$ENDREGION}, but I couldn't get the collapse button to appear. I tried it elsewhere in the code, worked just fine. Tried a different unit, same problem. Well it's Delphi 2006, maybe restarting it will fix it. Nope.

It turns out that $REGION only collapses after the interface line. I moved interface to above the {$REGION} line and all was good. I couldn't find that factoid documented anywhere.

Tuesday, October 03, 2006

Best description of VMware/Virtual PC ever....

"It’s kind of like Las Vegas. What happens in the virtual machine, stays in the virtual machine."

-Leo Laporte, September 17th 2006

Friday, September 22, 2006

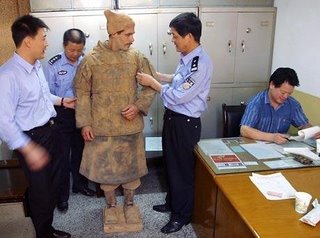

The Terra Cotta Army: 1000 Chinese statues + 1 German art student

This story off of the AP wire I found greatly amusing. A 26 year old art student named Pablo Wendel joined the Terra Cotta warriors in Xi'an. His home made costume was so convincing it took a while for the security guards to find him.

Friday, September 15, 2006

How to be a programmer

I saw this on secretGeek today:

    intellisense
         ||
         \/
        code >>> compile >>>> run >>> success ;-)
         /\         ||          ||
         ^^         \/          \/
         ^^       errors      errors
         ^^          \\        //
         ^^           \\      //
         ^^             ||
          \\            \/
           \<<<<<<< cut N paste

I have days where this is my programming model.

Thursday, September 14, 2006

Where is "Step Into" on my Visual Studio 2005 Debug menu?

I was debugging some code in VS 2005 when I noticed that I could no longer find "Step Into" on the debug menu. I was already used to the keyboard shortcut of F11, but I was wondering why it was no longer on the menu. After some googling, I found a post from Paul Litwin's blog that explained how to fix it. Basically, the IDE thinks that you just don't want to see that menu item and you just need to reset your profile. Here are the steps that Paul listed on his blog:

- Click the Tools->Import and Export Settings menu item

- Choose Reset all Settings

- Don’t save your settings

- Pick a new profile

- Profit!

OK, I couldn't resist the last one. The $64,000 question is why this happened in the first place. When Paul posted his blog entry, VS 2005 was still new and shiny and the thought was that a prior installation of VS 2005 (beta?) had somehow screwed up the settings. That didn't match my setup; I avoided the VS 2005 beta like the plague. I do have VS 2003 installed, and that may have confused VS 2005. I also installed SQL Server 2005 before VS 2005, which could also have confused VS 2005.

Tech Tags: Step+Into Visual+Studio+2005 VS+2005 Underpants+Gnomes

Monday, September 11, 2006

Surfing with Takeshi Terauchi and the Bunnys

While skimming Boing Boing, I came across a link to some MP3s by Takeshi Terauchi. These files come courtesy of WFMU's Beware of the Blog. Takeshi Terauchi plays surf guitar music the old school way; these MP3s were from recordings that are four decades old. They sound like the Ventures backed by a traditional Chinese opera (in a good way). WFMU has two complete albums, This is Terauchi Bushi and Let's Go Classics.

Let's Go Classics is a surf guitar interpretation of traditional classical pieces like the Blue Danube Waltz and the theme from Swan Lake. Very different.

Takeshi Terauchi started out as a sideman in a Country/Western band, Jimmy Tokita & the Mountain Playboys. In 1962, Terauchi formed his first Eliki (Japanese slang for Electric) band, the Bluejeans, becoming Japan's first Surf band. In '66, Terauchi quit the Bluejeans and formed the Bunnys, the group behind the two albums on WFMU.

Thursday, September 07, 2006

THIS IS YOUR REPLACEMENT TAG

I'm on my third E-ZPass tag. The battery in the first one died after about 5 years. I have had the second one for another 5 years. This time, the E-ZPass people sent me a new one before the current one died.

They did it the right way. The new tag came in a small envelope with a letter and a return envelope. The return envelope was for the old tag so it could be sent back. It was a pre-paid envelope, a nice touch. The letter explained that the old tag had to be sent back, or I would be charged for its replacement cost.

They clearly labeled the serial number of the old tag and of the new one. I have two tags in use, I had to check each car to grab the right one.

The replacement tag had an easy-to-peel-off sticker with the following text: THIS IS YOUR REPLACEMENT TAG. Seems kind of obvious, but if you are fooling around in your garage with the tags, that makes it easy not to mix up the old tag with the new one.

They could have mentioned which car the tag was for. The only way to tell was by the serial number on the front of the tag. I could read it just fine, but it's in small print and that's not too friendly for the AARP crowd. That would be the only thing I would have changed with how they handle replacements.

More companies should study how the E-ZPass program is run. The hardware just works, no fuss or muss. We recently drove from Albany, NY to Corolla, NC and just about every toll bridge and road accepted the E-ZPass. The only exception was the Chesapeake Bay Bridge-Tunnel, and they are in the process of implementing an electronic toll collection system (E-ZPass).

Why you won't see Linux on Corporate America's desktops just yet

I came across this blog post by Robert Scoble while checking something for a GPS data collector that I am writing. It has a useful data point to file in the back of the mind. It has nothing to do with GPS data collection or even what I was googling for, but it explains a very good reason why you won’t see Linux on corporate desktops for the foreseeable future.

I can sum it up for you with one sentence: Linux fonts and their technology are no match to Windows and the Mac.

I'm not bashing Linux, I have a few things that run on it at home. Not paying the Microsoft tax makes perfect sense for devices using embedded OS's. I love my TiVo and the fact that it uses an OS that doesn't blue screen. My $70 router/access point is running a pretty cool 3rd party firmware that could only be done if it was using Linux.

But on the desktop, I want a desktop that is easy on the eyes. The quality of the fonts and how they are displayed are a huge part of that. Microsoft recognizes that and they spend a lot of time and money on fonts. Consolas is a very nice typeface for pounding out code. It's not everyone's cup of tea, but I like it.

Monday, September 04, 2006

Project Bluemoon

Dave Zatz uploaded to YouTube a file that's relatively hidden on TiVo's web site which details the mysterious origins of the TiVo device. Tune in and watch "Project Bluemoon".

You can also download the file as an .mp4 video (50 MB) directly from TiVo's web site at www.tivo.com/bluemoon.

There look to be some subliminal text messages in the video, but I couldn't navigate YouTube's controls with enough precision to get the frames with the text. It was a more subtle effect than the blipverts used in some GE commercials that ran back in May.

Friday, September 01, 2006

TiVo Series 3: Looks cool, pretty costly

I've been drooling while reading the various articles and blog postings about the soon-to-be-released TiVo Series 3.

It has the stuff I want: Ethernet support built in, dual tuners, cablecard, High Def, big honking hard drive (plus external SATA port). The rumored list price is $795. That's a lot of shekels. I have two Series 2 units and $800 is too much for my pocket. Maybe next year if the prices drop...

I read a rumor that they are finally adding WPA support for their 802.11g adapter. I don't know how accurate that rumor is; TiVo has been planning on supporting WPA for nearly two years.

It's stunning that they have gone so long without WPA. When I first bought my TiVos, I had them on the home network using a wireless connection. I did it a little differently than most people. TiVo supports a limited range of USB connected wireless network adapters. TiVo supports WEP, but not WPA.

WEP is fairly trivial to crack, while WPA is pretty secure. Our neighborhood is pretty noisy on the 2.4 GHz spectrum; everybody and their mother has a wireless router/access point. I had set up the wireless part of my home network to be 802.11g with WPA security. TiVo's wireless solution was not going to work.

There is more than one way to provide wireless Ethernet. I picked up a couple of SMC 2870W wireless bridges. A wireless bridge converts an Ethernet signal to an 802.11 (a/b/g/n) signal. It works transparently to the Ethernet equipped device. That device thinks it's on a wired network and there are no drivers to install. The 2870W supports WPA right out of the box. The radio on the 2870W is a lot more powerful than the run of the mill USB wireless adapters; it's like having mini access points on each machine.

With the TiVo, I use a cheap Linksys USB to Ethernet adapter and connect that adapter to the bridge. The TiVo thinks it's on a wired network and everyone is happy. There is an additional performance benefit from offloading the 802.11g processing from the TiVo to the 2870W. The bottleneck becomes the 11 Mb/s speed of the USB connection. The TiVo CPU is not the fastest in the world. It's roughly equivalent to a Pentium 100, so there is no need to have it tackle 802.11 processing if it doesn't have to. That's probably the reason it didn't support WPA: just not enough horsepower under the hood.

The Linksys/SMC combination worked very well; I never had any problems. I had more problems using a Linksys 802.11g adapter on a Windows XP box on the same network and in the same room as one of the TiVos.

I ended up running CAT5e cable through the house when I switched to FiOS and now the TiVos are wired to the network. If you can do that, life is much easier. With each TiVo directly on the network, I can transfer shows between the TiVos in real time. I'm still hobbled by the Series 2 USB speed (or lack thereof); the Series 3's built-in Ethernet adapter would be cool to have.

Thursday, August 31, 2006

Diagnostic mode for iPod Shuffle

Shuffle Lights

Main

| Light | Meaning |
| --- | --- |
| Solid orange | Charging, OK to disconnect. |
| Blinking orange | Mounted, do not disconnect. |
| Solid green | Connected to charger: fully charged, OK to disconnect. Not connected: play. Light will turn off after two seconds. |
| Blinking green | Paused: light will turn off after one minute. |
| Solid orange when you press a button | Hold is on. To turn it off, hold the Play/Pause button for three seconds. |
| Solid green when you press a button | Normal operation of that button. The light stays on as long as you press the button. |
| Blinking green and orange | You're trying to play a song, but the shuffle is empty. |

Battery Status (when pressed)

| Light | Battery status |
| --- | --- |
| Green | Good charge. |
| Amber | Low charge. |
| Red | Very low charge. |
| Off | No charge. |

courtesy of Craig A. Finseth

I just took a MSDart to the head and I don't feel so well right now.

One of our clients went to run some of our applications on their Windows Server 2003 SP1 box and it wasn't very pretty. They got the error message:

"The procedure entry point DefWindowProcI could not be located in the dynamic link library msdart.dll"

Not a very helpful message. It happens when we access an ADO object within our code. We couldn't duplicate the problem on our W2K3 SP1 boxes. Some searches through Google seem to point the finger at a corrupted MDAC stack with SP1. When our client rolled SP1 back, the problem went away. Microsoft seems to be aware of a similar problem as they have a KB article, 889114, that describes a similar issue in Msdart.dll, but not with W2K3. I have also seen references to another article, 892500, but that one refers to DCOM permissions. I'm not sure if that one is relevant.

The question now is how to resolve this issue. How many ways can SP1 be installed on Windows Server 2003? Our boxes got SP1 through Windows Update and they have the right version of Msdart.dll.

Leave that thread priority alone

During initial e-Link web service development, I played around with lowering the priority of a background processing thread. It didn't need to run in real time and having the service handle client requests was more important. For a background housekeeping thread, I lowered its priority to BelowNormal.

Well that didn't work quite the way I expected it. That thread never ran, or it ran so seldom that it was effectively useless. So I removed the calls to lowering the thread priority and life was good again. I chalked it up to one of those thread things not to touch and moved on to other tasks.

Today, I saw a posting on Coding Horror that explained why you should never mess with thread priorities. If you have a thread running at a lower priority and it enters a sleep state, it may never wake up if another thread with a higher priority continues to run. That's an oversimplification; the actual details are described better here by Joe Duffy.

The moral of today's story is "Set up your threads at normal priority and let the operating system deal with scheduling them"

Thursday, August 24, 2006

Stream reading in C#

I was banging my head against the wall with an odd stream reading problem. I was making a web service call as straight HTTP, no SOAP, when I hit a snag reading the response back. I was making the request with an HttpWebRequest object and getting the HttpWebResponse back by calling the HttpWebRequest GetResponse() method. From the response object, I was using GetResponseStream() to get at the content. The data coming back was of variable size. You would get a fixed size header block, plus a number of fixed size data entries. The header block had a field to say how many data blocks there would be.

Naively, I thought I could just use a BinaryReader on the data stream and read x number of bytes in for the header block. Then I would parse that header to get the number of data blocks and then call Read() for that number of data blocks. Let's say that the header block was 64 bytes in size and the data blocks were 32 bytes. I had logic like the following:

    // Assumes: using System.IO; using System.Net; using System.Runtime.InteropServices;
    HttpWebRequest req = (HttpWebRequest)WebRequest.Create(uri);
    HttpWebResponse resp = (HttpWebResponse)req.GetResponse();
    Stream stream = resp.GetResponseStream();
    BinaryReader br = new BinaryReader(stream);

    // Read the fixed-size header block (64 bytes in this example).
    byte[] buff = new byte[Marshal.SizeOf(typeof(MyHeader))];
    int c = br.Read(buff, 0, 64);
    GCHandle handle = GCHandle.Alloc(buff, GCHandleType.Pinned);
    MyHeader header = (MyHeader)Marshal.PtrToStructure(handle.AddrOfPinnedObject(), typeof(MyHeader));
    handle.Free();

    LogEntry MyLogEntry;
    for (int i = 0; i < header.EntryCount; i++)
    {
        buff = new byte[Marshal.SizeOf(typeof(LogEntry))];
        c = br.Read(buff, 0, 32);   // may come back with fewer than 32 bytes
        if (c == 32)
        {
            handle = GCHandle.Alloc(buff, GCHandleType.Pinned);
            MyLogEntry = (LogEntry)Marshal.PtrToStructure(handle.AddrOfPinnedObject(), typeof(LogEntry));
            handle.Free();
        }
    }

The problem was that c was sometimes less than 32. My bytes were disappearing. I did some quick sanity check code like this:

    c = br.Read(buff, 0, 8192);
    TotalBytes = c;
    while (c > 0)
    {
        w.Write(buff, 0, c);
        c = br.Read(buff, 0, 8192);
        TotalBytes += c;
    }

When I ran that, TotalBytes had the expected number. What was I missing? A little bit of googling found this bit of extremely helpful information from a guy named Jon. I was reading while data was still coming into the stream. The Read method can return before all of the data has been written to the source stream, so I had to read the stream into a holding array, chunk by chunk, until there were no more bytes. Then I could read the data from the array. This was so obvious, I can't believe I missed it. The ReadFully() method that Jon supplied worked quite well.
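The short-read behavior is not specific to .NET, so here is a minimal Python sketch of the same idea. It uses a slightly different technique than the ReadFully() mentioned above: instead of buffering the whole response, it loops until the requested number of bytes has arrived. The `TrickleStream` class, the 4-byte header, and the 8-byte entries are all made up for the illustration; they are not the real e-Link layout.

```python
import io
import struct


def read_exactly(stream, size):
    """Keep calling read() until `size` bytes arrive or the stream ends."""
    chunks = []
    remaining = size
    while remaining > 0:
        chunk = stream.read(remaining)
        if not chunk:  # an empty result means end of stream
            raise EOFError(f"expected {size} bytes, got {size - remaining}")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


class TrickleStream:
    """Hypothetical stand-in for a network stream: at most 5 bytes per read."""

    def __init__(self, data):
        self._inner = io.BytesIO(data)

    def read(self, size):
        return self._inner.read(min(size, 5))


# Assumed layout: a 4-byte little-endian entry count, then 8-byte entries.
payload = struct.pack("<I", 2) + b"entry001" + b"entry002"

# A single read() asking for 8 bytes comes up short, like br.Read() did.
short_read = TrickleStream(payload).read(8)

# Looping until the full block has arrived gives complete records.
stream = TrickleStream(payload)
(count,) = struct.unpack("<I", read_exactly(stream, 4))
entries = [read_exactly(stream, 8) for _ in range(count)]
```

The point of the sketch is only that the return value of a read call has to be checked and the call repeated; whether you buffer everything first or loop per block is a design choice.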

In case you were wondering about the GCHandle stuff, that was needed to marshal C style structures into C# structures. Getting that bit of code to work right is another story....

Vista performance (or lack of)

I just installed the Beta 2 of Vista on one of my dev boxes. It used to be my primary development box until I got a bright shiny new one last year. About two weeks ago, the hard drive had a massive failure (it needs to be defraggled at this point) and I needed to rebuild the box. Since we had the Vista DVD, I figured why not. We set it up as a dual boot, with XP as the "other" OS.

After a couple of days of use, I've come to this conclusion. Vista is a pig. It's slow to boot and slow to run. It's running on an older box, a P4 1.7 GHz with 1GB of RAM, but that box is fast enough to run XP without any issues. It's slow enough that I am not going to use it as a day to day OS. I'll run XP as the primary OS and I'll manually boot into Vista when needed. The performance issues come with the beta tag, and that's all well and good. I just can't use it. It felt like OS/2 on a 386.

The fun part was trying to figure out how to get XP back as the default OS. The new Vista Boot Loader is a strange and wonderful beast, but it's not your father's boot.ini file. With boot.ini, it's a trivial process to set the default OS. Vista requires you to use a new command line tool named bcdedit.exe. With bcdedit, you can specify the default OS, by using the /default parameter and the GUID of the OS to run.

The GUID of the OS? Where the frack do I get the GUID of the OS? If you run BCDEDIT /enum all, you get a listing of everything BCDEDIT knows how to load and that includes the GUID. Except for my XP, which didn't get one. Apparently that's a magic number: if you run bcdedit /default {466f5a88-0af2-4f76-9038-095b170dc21c}, the legacy OS becomes the default.

Since I'm using the Vista Boot Loader, I'll need to remember to restore XP's boot loader before I rip out this Vista Beta for the next one. In the Vista section of Tech-Recipes, they have helpful information on how to do that. What you need to do is the following:

- Reboot using the XP CD-ROM

- Start the Recovery Console

- Run FixBoot

- Run fixmbr to reset the Master Boot Record

- Exit the Recovery Console

- Reboot

Lifehacker has some tips on how to setup the dual boot here.

Friday, August 18, 2006

Back from vacation

After a couple of weeks of vacation, I came back to the office to find my old development box DOA. It was worse than a Blue Screen: it was a black screen with a Read/Write error message. My C: partition was filled with CRC errors. I could copy some files off it, but most were toast. A second partition on the same drive reported no errors, so I don't think it was a drive failure. We did have a brown out while I was away, and that box only had a surge protector to save it. Which it didn't.

I was planning on installing the Vista Beta 2 in a VMware session on my main development box, but I think it will go on the old box. I have to reinstall the OS anyway, so why not go with the beta? It's not like I have anything to lose at this point.

This post and the one before it were created with Windows Live Writer (Beta). It's a pretty cool blog entry tool from Microsoft. What is really nice about it is that it reads your blog setup and you can edit the posts in the look and feel of your actual blog. It just works. It didn't display the map below, but it showed where it would be.

Another cool feature is that you can edit previously posted entries and it replaces the old post with the new post. This is my 3rd attempt on this post.

We spent a week down on the Outer Banks in North Carolina. We have been going down there on and off for the last ten years and I like it a lot. This year, we stayed in Pine Island, on the southern end of Corolla. I played a bit with Wikimapia and got this map of where we stayed:

The house in the lower left corner is where we stayed. I'd tell you the name, but I want to rent it next year...

Dates are not numbers

One of the other developers that I work with had a question about inserting some date values into a SQL Server database. The code in question is doing a batch insert, implemented as a series of INSERT statements that get executed in large batches. He was having some difficulty in getting the right values for the dates. He was formatting the INSERT statement with the datetime values as numeric values. The end result was that the dates were off by two days. It was easy to fix and is yet another example of how a leaky abstraction can bite you in the ass.

Dates are not numbers. You have to consider them to be an intrinsic date type, even if the environment handles them internally as floating point numbers. It's easy to get into the habit of adding 1.0 to a datetime variable to increment the time by one day. SQL Server will happily let you do so, and so do many programming languages. Oddly enough, Sybase Adaptive Server Anywhere won't let you treat datetime values as numbers; they force you to do it the right way.

The problem is how each environment anchors that numeric value to the actual calendar. What day is day 0? It depends on what created that value. For SQL Server, the number 0 corresponds to 1900-01-01 00:00:00. In the Delphi programming environment that we do a lot of work in, 0 works out to be 1899-12-30 00:00 (a Saturday for those keeping score). If you pass in a datetime as a numeric, when you query it back out of SQL Server, it's going to be two days ahead of your original date.

The 1899 date was defined by Microsoft when they defined their OLE Automation data types. The .NET runtime uses 0001-01-01 as its starting point. Those are not the only ways datetime can be encoded. Raymond Chen did a decent round up on his blog.

The way we usually pass in datetime variables in non-parameterized INSERT statements is to encode the datetime variable as a string in the standard SQL-92 format (yyyy-mm-dd hh:mm:ss).
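The two-day drift and the string workaround can be sketched in a few lines of Python. This is only an illustration under the day-zero values quoted above; the helper names are made up, and the sample date is arbitrary (noon is used so the fractional day is exact).

```python
from datetime import datetime, timedelta

# Day-zero values as described in the post.
SQL_SERVER_DAY_ZERO = datetime(1900, 1, 1)
DELPHI_DAY_ZERO = datetime(1899, 12, 30)


def to_delphi_number(value):
    """Encode a datetime as days since the Delphi/OLE Automation day zero."""
    return (value - DELPHI_DAY_ZERO) / timedelta(days=1)


def from_sql_server_number(number):
    """Decode a day count the way SQL Server anchors it."""
    return SQL_SERVER_DAY_ZERO + timedelta(days=number)


def to_sql_literal(value):
    """Format a datetime as a quoted yyyy-mm-dd hh:mm:ss string."""
    return value.strftime("'%Y-%m-%d %H:%M:%S'")


original = datetime(2006, 8, 18, 12, 0, 0)

# Passing the raw number across environments shifts the date.
round_tripped = from_sql_server_number(to_delphi_number(original))
drift = round_tripped - original

# Passing a string carries no hidden day zero with it.
literal = to_sql_literal(original)
```

Here `drift` works out to exactly two days, which is the gap between the two day-zero dates, while the string form is the same no matter which environment produced it.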

Tuesday, August 01, 2006

Hidden gotcha in FreeAndNil()

So I'm pounding through the code and making sure that everything that gets created, gets freed. Great fun, I recommend it for the entire family. I'm starting the service (actually the app version of the service, but that's another posting), then exiting it after it initializes. That way I can clear out all of the obvious suspects and then turn my attention to the serious memory leaks.

So I'm in the middle of doing this, when one of the objects that I am now explicitly freeing is now blowing up when I free it. And not in a good way. This object, let's call him Fredo (not really the name), owns a few accessory objects (call them Phil and Reuben). In Fredo's destructor, Fredo is destroying Phil & Reuben. In Phil's destructor, Phil references another object belonging to Fredo and blows up because Fredo has gone fishing and doesn't exist anymore.

It took a while to figure out what was going on. You see, Fredo wasn't actually fishing; Fredo was still around. Phil was accessing Fredo through a global variable (bad legacy code) because Fredo was a singleton. The variable that referenced Fredo had been set to nil, even though Fredo was still in existence.

It took a while, but I figured where and how I had broken Fredo. The code that I had added to destroy Fredo looked like this:

FreeAndNil(Fredo);

The FreeAndNil() procedure was added back around Delphi 3 or so. You pass in an object reference, it frees that object and sets the reference to nil. Horse and buggy thinking for the managed code set, but useful in non-managed versions of Delphi. The problem was that FreeAndNil doesn't exactly work that way. Let's take a quick peek at that code:

    procedure FreeAndNil(var Obj);
    var
      Temp: TObject;
    begin
      Temp := TObject(Obj);
      Pointer(Obj) := nil;
      Temp.Free;
    end;

It's setting the variable to nil before it frees it. That's not how it's documented and it caused my code to fail. There's nothing wrong with how FreeAndNil is coded: by setting the variable to nil first, other objects can check to see if it still exists and not try to access that object while it's being destroyed. I just would have preferred that the documentation more accurately described the actual functionality.
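The ordering issue is easier to see with both variants side by side. This is a toy Python model, not Delphi: the `registry` dictionary stands in for the global variable, `destroy()` stands in for the destructor, and every name in it is hypothetical.

```python
registry = {"fredo": None}  # plays the role of the global singleton variable
events = []                 # records what each "destructor" observed


class Phil:
    def destroy(self):
        # Legacy-style access: reach the owner through the global reference.
        owner = registry["fredo"]
        if owner is None:
            events.append("Phil: global is already nil")
        else:
            events.append("Phil: used " + owner.name)


class Fredo:
    name = "Fredo"

    def __init__(self):
        self.phil = Phil()

    def destroy(self):
        self.phil.destroy()
        events.append("Fredo: destroyed")


def free_and_nil(holder, key):
    """Same ordering as the Delphi routine: clear the reference, then free."""
    temp = holder[key]
    holder[key] = None
    temp.destroy()


def free_then_nil(holder, key):
    """The ordering the name suggests: free first, clear afterwards."""
    holder[key].destroy()
    holder[key] = None


registry["fredo"] = Fredo()
free_and_nil(registry, "fredo")

registry["fredo"] = Fredo()
free_then_nil(registry, "fredo")
```

In the first run, Phil finds the global already cleared while Fredo is still being torn down, which is the situation that blew up in the Delphi code. In the second run, Phil can still reach Fredo through the global.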

Friday, July 28, 2006

Poor man's guide to memory usage tracking

What I want is to log the memory usage to a text file, with each entry timestamped. I was able to do this with almost all off the shelf parts. I did have to write the timestamper, but that was a trivial task. Since the home viewers will not have my service, pick a service or app of your own and play along. I'll describe what I did using Firefox as a substitute for the actual service.

In the excellent PsTools suite over at SysInternals site, there is a utility named PsList. It's a combination of the pmon and pstat tools that works like a command line version of the "Processes" tab of Task Manager. By default it lists information for all running processes, but you can filter it by service name or process ID. I wrote a batch file to call PsList with the service name and the "-m" command line switch to print the memory usage. PsList prints some banner information with the details. Something like this:

    PsList 1.26 - Process Information Lister
    Copyright (C) 1999-2004 Mark Russinovich
    Sysinternals - www.sysinternals.com

    Process memory detail for Kremvax:

    Name         Pid      VM      WS    Priv Priv Pk   Faults   NonP Page
    firefox     3936  108952   41380   32748   36452   140201      8   54

All fine and good, but not pretty enough for a log file. What I needed was just the last line. So I piped the output from PsList through the good ol' FIND command with "firefox" as the filter text. With that, I can redirect the output to a file (with append). I ended up creating a batch file named memlog.cmd that had the following commands:

    pslist -m firefox | find "firefox" >>c:\logs\memuse.txt

That gave me the last line in a file. But I still needed the time stamp. I thought about going through some script file sleight of hand with ECHO and DATE, but this is the Windows Server 2003 CMD.EXE. It doesn't have that skill set. I could do it with some 3rd party shells, but the goal is something I can deploy on a remote site without anyone having to pay for a tool or go through the hassle of installing something like PowerShell.

Time to fire up Delphi and create a little command line app that would take text coming in as standard input and send it back out as standard output, but with a timestamp prepended to the text. The source code has less text in it than the previous sentence. If you have Delphi, the following code will give you that mini-tool. I used Delphi 7; any of the Win32 versions should do.

    program dtEcho;

    {$APPTYPE CONSOLE}

    uses
      SysUtils;

    var
      s: string;

    begin
      // Read one line from standard input and echo it with a timestamp prefix.
      ReadLn(s);
      WriteLn('[' + FormatDateTime('yyyy-mm-dd hh:mm', Now) + '] ' + s);
    end.

There's no banner or error checking. I didn't need any of that and I wanted to keep it light. By adding dtEcho to my batch file like this:

pslist -m firefox | find "firefox" | dtecho >>c:\logs\memuse.txt

I now get output like this:

    [2006-07-28 23:14] firefox 3936 536904 61324 51244 57384 445377 90 249
    [2006-07-28 23:15] firefox 3936 538176 60844 50764 57384 449193 91 249
    [2006-07-28 23:16] firefox 3936 538212 60620 50528 57384 455935 91 249

The output only goes down to the minute. I'm tracking the memory usage every 10 minutes, so I didn't need to make the timestamp that granular. If I needed it, I would just need to make a slight change to the dtEcho source code and it would print the seconds.

To run that batch file, I just used the scheduled tasks control panel applet and set it to run off of my account. For remote deployment, that would probably be the hardest step.
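For anyone without Delphi, the timestamp filter can be sketched in Python as well. This is an illustration only, not something I deployed: the function names are made up, and the clock is passed in as a parameter purely so the example can be checked with a fixed time.

```python
import io
from datetime import datetime


def stamp_line(line, now):
    """Prefix one line with a [yyyy-mm-dd hh:mm] timestamp."""
    return "[" + now.strftime("%Y-%m-%d %H:%M") + "] " + line.rstrip("\r\n")


def dt_echo(source, sink, clock=datetime.now):
    """Like dtEcho: read a single line from source, write it stamped to sink."""
    line = source.readline()
    sink.write(stamp_line(line, clock()) + "\n")


# Demonstration with in-memory streams and a fixed clock (both illustrative).
sample_in = io.StringIO("firefox 3936 108952 41380 32748 36452 140201 8 54\n")
sample_out = io.StringIO()
dt_echo(sample_in, sample_out, clock=lambda: datetime(2006, 7, 28, 23, 14))
result = sample_out.getvalue()
```

To use it as a real filter in a pipeline, you would call `dt_echo(sys.stdin, sys.stdout)` after an `import sys`. Changing the format string to include `%S` would add the seconds.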

Thursday, July 27, 2006

SQL Server WHERE clause tip (not needed for SQL Server 2005)

    CREATE TABLE [MyLog](
        [RecordID] [int] IDENTITY(1,1) NOT NULL,
        [LogTimeStamp] [datetime] NOT NULL,
        [Duration] [decimal](12, 4) NOT NULL,
        [SessionID] [varchar](40) NOT NULL,
        [IP] [varchar](24) NOT NULL,
        [Request] [varchar](80) NULL,
        [Response] [varchar](80) NULL,
        [Error] [varchar](255) NULL,
        [Description] [varchar](80) NULL,
        CONSTRAINT [PK_MyLog] PRIMARY KEY CLUSTERED
        (
            [RecordID] ASC
        ) ON [PRIMARY]
    ) ON [PRIMARY]
    GO

    CREATE NONCLUSTERED INDEX [SK_MyLog_LogTimeStamp] ON MyLog
    (
        [LogTimeStamp] ASC
    ) ON [PRIMARY]

I would execute the following SQL statement:

    DELETE MyLog WHERE DATEDIFF(DAY, LogTimeStamp, GETDATE()) > 30

It's pretty simple: use the DateDiff() function to compare the timestamp field with the current date and, if it's older than 30 days, delete that record. I implemented that in the first go-around of the code, about two years ago. This week, I was in that area of the code for some maintenance and I took another look at that statement. That WHERE clause jumped right out at me. For every row in that table, DateDiff() is going to be called, and I assumed GetDate() would be too, since it's a nondeterministic function. SQL Server will need to compare every value of LogTimeStamp to see if it is older than 30 days ago. In this case, MyLog has an index on LogTimeStamp, but because the column is wrapped in a function call, it will have to read the entire index. Since the actual date comparison is against a constant value, I decided to evaluate the comparison date first and change the WHERE clause to a simpler expression.

DECLARE @PurgeDate smalldatetime
SELECT @PurgeDate = DATEADD(DAY, -30, GETDATE())
DELETE MyLog WHERE LogTimeStamp < @PurgeDate

I added a smalldatetime variable and assigned it the date of 30 days ago with the DateAdd() and GetDate() functions. Now SQL Server can use the value of @PurgeDate to jump into the index and jump out when the date no longer matches the criteria. I implemented this on SQL Server 2005, and when I evaluated the estimated execution plans for each delete statement, I was surprised to see identical plans. Both sets of statements spent the same percentage of time scanning and deleting.

When I did the same evaluation on SQL Server 2000, I saw different results. The first delete statement spent 73% of the time scanning the index and 27% actually deleting rows from the table. The second delete statement spent 19% of the time scanning and 81% of the time deleting rows. On a table that could have a large number of rows, it turned out to be a big performance saving on SQL Server 2000 installations.

It's pretty cool that the SQL Server 2005 parser is smart enough to optimize code and recognize a constant expression when it sees it. My code would have seen a nice little performance boost by moving from SQL Server 2000 to SQL Server 2005. It's still a better thing to pull constant expressions out of the WHERE clause when you can do that.
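The scan-versus-seek difference is easy to see on a small scale. The following is only a sketch, and it uses SQLite through Python's sqlite3 module as a stand-in, not SQL Server: the cut-down MyLog table, the julianday() arithmetic standing in for DateDiff(), and the plan() helper are all assumptions made for illustration. It asks the engine for its query plan for both forms of the DELETE and then runs the version with the precomputed purge date.

```python
import sqlite3
from datetime import datetime, timedelta


def plan(conn, sql, params=()):
    """Return the plan detail strings SQLite reports for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]


conn = sqlite3.connect(":memory:")
# Cut-down, hypothetical version of the MyLog table: just the key and the timestamp.
conn.execute(
    "CREATE TABLE MyLog (RecordID INTEGER PRIMARY KEY, LogTimeStamp TEXT NOT NULL)"
)
conn.execute("CREATE INDEX SK_MyLog_LogTimeStamp ON MyLog (LogTimeStamp)")

# One row per day for the last 60 days, counted back from a fixed "now".
now = datetime(2006, 7, 27, 12, 0, 0)
stamps = [((now - timedelta(days=d)).isoformat(" "),) for d in range(60)]
conn.executemany("INSERT INTO MyLog (LogTimeStamp) VALUES (?)", stamps)

# Form 1: the indexed column is wrapped in a function call.
wrapped = "DELETE FROM MyLog WHERE julianday(?) - julianday(LogTimeStamp) > 30"
wrapped_plan = plan(conn, wrapped, (now.isoformat(" "),))

# Form 2: compute the purge date first, then compare the bare column to it.
purge_date = (now - timedelta(days=30)).isoformat(" ")
bare = "DELETE FROM MyLog WHERE LogTimeStamp < ?"
bare_plan = plan(conn, bare, (purge_date,))

print("wrapped column:", wrapped_plan)
print("bare column:   ", bare_plan)

# Run the second form and see what is left.
deleted = conn.execute(bare, (purge_date,)).rowcount
remaining = conn.execute("SELECT COUNT(*) FROM MyLog").fetchone()[0]
print(deleted, "rows deleted,", remaining, "rows remaining")
```

The exact plan wording varies between SQLite versions, but the first statement should be reported as a scan and the second as a search on SK_MyLog_LogTimeStamp. That is the same shape as the SQL Server 2000 result, on a different engine.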

SQL formatting courtesy of The Simple-Talk SQL Prettifier for SQL Server.

Friday, July 14, 2006

Migrating to Delphi 2006

Most of our Delphi code has been in Delphi 5 for a number of reasons. We mainly stayed with it because it worked and did what we needed. We did a complete rewrite of our flagship app, VersaTrans RP, and Delphi 6 and Delphi 7 were released during that cycle. Delphi 6 didn't add any features that we needed and Delphi 7 came out during regression testing for RP. It would have been insane to switch compilers during final testing. I do use Delphi 7 for our web based application, e-Link RP, mainly because of the SOAP functionality. Now it's time to round up the children and jump on the Delphi 2006 wagon.

One of the packages that I migrated up was Abbrevia. This is an Open Source compression toolkit that includes components for handling PKZip, CAB, TAR, and gzip formats. It used to be a commercial product from TurboPower, but a few years back it became Open Source when TurboPower was bought out by a Las Vegas gaming company. Since going Open Source, development has come to a flying stop and nothing new has been released since 2004. I had to tweak the package files somewhat to compile with Delphi 2006.

The distribution came with tons of files, including package files (.dpk) for every version of Delphi/C++Builder from 3 to 7. I took the Delphi 7 package files and edited them outside Delphi to make Delphi 2006 packages out of them. I probably could have done it within Delphi, but it was easy enough to do with TextPad. I changed the names to match the compiler, since Abbrevia uses the compiler version to differentiate between the various package files. B305_r70.dpk became B305_r100 and B305_d70 became B305_d100. Except they didn't include B305_d70, so I had to use the Delphi 5 version, B305_d50.

I also changed the file paths around. There is a source folder and a packages folder. I added a bin folder and edited the package files to place all of the compiled units in that folder. I also needed to explicitly add the source folder to the package's source path. Then I tried to compile. The runtime package compiled without incident. I moved its .bpl file to my system32 folder and moved on to the design time package. It failed to compile because it couldn't find the DesignEditors unit. The DesignEditors unit contains the base classes for implementing component editors. You used to be able to have that unit in your runtime code, but Borland has stopped allowing it. It does come with Delphi, just not on the default search paths.

So I figured I could just add the path to DesignEditors.pas to the package search path. Just add the path and Bob's your uncle. Nope, didn't work. DesignEditors.pas has a typo in it. It's missing a comma in the uses clause of the implementation section. My first inclination was to just add the idiot comma, but it seemed like other things would have failed if that was the case. I was doing it wrong. Instead of adding the source path, I was supposed to add the compiled package that contains the unit, DesignIDE.dcp. Once I added that package to the package's requires list, everything was good to go.

Thursday, July 13, 2006

Overheard at work today.

"It's Wise, so I really don't ask questions. I just work around it." — Sam Tesla, 7/12/2006

If you've worked with the Wise for Windows Installer, you'll understand...

[Joe White's Blog]

I finally received confirmation from Wise Tech Support that the last bug that I reported was a real bug. You can't install a service written in .NET 2.0 on a machine with the 1.1 and 2.0 Frameworks installed. Wise runs the installutil.exe from the Framework to install the service and they pick the wrong version to run. Of course, it may be fixed for the next release, but that's tentative. I will continue to use my workaround of self-installing through undocumented methods.

Friday, July 07, 2006

I don't care about RocketBoom

I first heard about RocketBoom when it became available through TiVo. I subscribed to it for about a week or so, but I cancelled it. I found her schtick to be tiresome and annoying. She's an acquired taste that I just couldn't acquire. Ze Frank is much better at that kind of stuff.

In the end, it will probably work out well for both partners. Congdon has been getting plenty of job offers and RocketBoom has had tons of free publicity.

Tuesday, June 27, 2006

What do the following have in common?

The answer is not this. These are the previously announced features of Microsoft Vista that have been dropped out of the product over the last couple of years. Here’s a link to a good article with the gory details…

At the rate features are being axed, at what point does Vista become XP SP3? It’s becoming XP with tighter DRM, and who wants more of that? Of all of the stuff that’s been yanked, WinFS was the one that I really wanted. I really hoped that MS could have pulled it off.

On the other hand, it just sounded too complicated to actually pull off. Having your file system be dependent on SQL Server means a lot more going on under the hood and that many more resources required. To a certain extent, the desktop search tools that came out in the last two years made the need for WinFS less important.

Tomato funeral

I saw a reference to “tomato funeral” on a .Net developer’s blog. I had to click through the link and ended up somewhere deep in the bowels of Wil Wheaton’s blog….

My friends and I have, in the past, enjoyed playing a game we affectionaly call "tomato funeral". Often when we find ourselves in close but temporary proximity to a stranger or group of strangers (like being in an elevator) one of us will start the game by turning to the other and asking:

"So then what happened?"

At this point it's the goal of the other person to come up with the most nonsensical but still plausible conclusion to a conversation that will presumably leave the strangers wondering for the rest of the day what possible situation could have led up to that phrase. The game is named after one of the earliest successes:

"Oh, well, she went to the funeral, but, well, you know, I doubt she'll ever eat tomatos again"

Points are awarded for creativity and quickness of response.

http://www.wilwheaton.net/mt/archives/001827.php#c109100

Tuesday, June 13, 2006

Fun with scripted load tests

We are getting ready to do some load tests and it's time to pick some tools. The app that we want to test is a client/server app with buckets of processing going on the client side. Most c/s load test tools just emulate the traffic that goes on between the client and the server. For our app, that won't work; the load occurs on both the client and the server, and we have to drive the app. This means one instance of the app per PC. We have tried multiple instances of the app on the desktop or through multiple terminal service sessions, but that just didn't work. We tried that a couple of years ago at the Microsoft testing facility in Waltham, MA, but it would only work as one app per machine.

So we will just do that here in the office. Some night, after everyone goes home, we'll log into each desktop with a special login (limited access) and run our tests. We are evaluating HighTest Plus, from Vermont Creative Software. It's an automated software testing tool that allows you to record and play back keyboard/mouse actions. What we want to do is to set up a repository of scripts and have a set of PCs run the scripts. The fun part is how to start each testing session without having to walk over to each machine, log in, and then run the script.

I first looked into somehow getting each machine to log into the testing account. There's no easy way to remotely script this. For security reasons, you have to use the keyboard and/or mouse with the login dialog; you can't bypass that with a script invoked from another machine. We didn't want to install any remote access software like VNC or pcAnywhere. It would cost too much and we didn't want to leave anything running on the machines during business hours. That leaves Remote Desktop. You can script the mstsc.exe client so that you can feed in the login information. So we started with that.

We wanted to start the remote desktop sessions and then disconnect from them, with the sessions still running. The reason for this was that the machine controlling the tests would end up with 10 to 40 remote desktop sessions and there could be a resource problem. We tried using Sysinternals' excellent PsExec utility to remotely invoke the HighTest executable. No matter how we sliced it, it would fail with a message hook error. This meant that we had to invoke HighTest from a process running on the remote PC. After banging a few things around, I created a batch file on the remote PC to launch the HighTest executable and added it to the "Scheduled Tasks" list. That worked, so I disabled the task so it wouldn't get launched by accident. The schtasks.exe utility can be used to run scheduled jobs, even from other machines, provided that the machine has an open desktop and is not a disconnected session. Disconnected sessions do not receive keyboard or mouse input, not even simulated input from HighTest.

We have one more trick up our sleeves. The remote desktop client (mstsc) can be configured to run a program after you are connected. If you have the "Remote Desktop Connection" dialog open, click on the "Programs" tab and you will be able to configure the program that you want to run. What we will do is have it run a batch file. That batch file will run the HighTest tool, do any cleanup, and then log out. And that's how we plan on running our load tests.

[Edited on 6/14/06]

Scratch the running of programs from the "Remote Desktop Connection" dialog. That only works if you are connected to a Terminal Server. You get bupkis if you use that option with Windows XP. We are back to looking at using schtasks.exe after starting the remote session with Remote Desktop.
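For the schtasks.exe route, the controlling machine mostly needs to loop over the test PCs and ask each one to run its task. Here is a minimal sketch of that loop in Python. The machine names and the "LoadTest" task name are made up, and it assumes the scheduled task already exists on each PC and that the account running the script has rights on the remote machines. The /Run, /S, and /TN switches are standard schtasks.exe options.

```python
import subprocess


def build_run_command(machine, task_name):
    """Build the schtasks.exe command line that starts a task on a remote PC."""
    return ["schtasks", "/Run", "/S", machine, "/TN", task_name]


def start_load_test(machines, task_name, runner=subprocess.run):
    """Ask every machine to start the task; return the machines that refused.

    The runner parameter exists so the loop can be exercised without
    actually calling schtasks.exe.
    """
    failed = []
    for machine in machines:
        result = runner(build_run_command(machine, task_name))
        if result.returncode != 0:
            failed.append(machine)
    return failed


# Hypothetical usage from the controlling machine:
#   failed = start_load_test(["TESTPC01", "TESTPC02"], "LoadTest")
#   print("could not start:", failed)
```

This only covers kicking off the task. Each PC still needs a connected Remote Desktop session first, since a disconnected session won't accept the simulated input.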

Tuesday, June 06, 2006

Self installing services in .NET

I have some service applications that I deploy with Wise for Windows. These particular services are .NET assemblies. The usual way of registering the .NET assembly as a service is to use the installutil.exe that comes with the .NET Framework. Wise made it easy to register the assemblies by adding a checkbox in the file properties for self installation. Behind the scenes, Wise must be calling installutil, because it fails when you have multiple versions of the .NET Framework installed. Installutil is not compatible across Frameworks. You can’t install a 1.1 assembly with the 2.0 installutil, and vice versa.

Wise does not let you specify which version of the Framework is being used by a particular assembly. It should be able to tell through Reflection, but it doesn’t. This means I can’t specify the correct installutil to use for my services. This is not good and causes my install projects to go down in flames. I really can’t wait for Wise to fix this.

I could call installutil directly, but that means putting all sorts of fugly code into the install project to correctly locate the appropriate version of installutil. And that code would probably break the minute Microsoft updates the .NET Framework. So we move to Plan B, self-installing services. You would think that this would be a simple walk through the MSDN garden, but their code examples assume that that task is being handled manually via installutil or through a Windows Installer project.

After a bit of Googling, I found a reference to an undocumented method call, InstallHelper, in the System.Configuration.Install.ManagedInstallerClass class. By using this method, I can install or uninstall the service from the command line.

I augmented the Main() function in the service class to look like this:

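// Needs "using System.Reflection;" at the top of the file for Assembly.
static void Main(string[] args)
{
    if (args.Length > 0)
    {
        if (args[0] == "/i")
        {
            // Install: register this assembly as a service
            System.Configuration.Install.ManagedInstallerClass.InstallHelper(new string[] { Assembly.GetExecutingAssembly().Location });
        }
        else if (args[0] == "/u")
        {
            // Uninstall: same helper, with the /u switch in front of the path
            System.Configuration.Install.ManagedInstallerClass.InstallHelper(new string[] { "/u", Assembly.GetExecutingAssembly().Location });
        }
        else if (args[0] == "/d")
        {
            // Debug: run the service code as a plain application
            CollectorService MyService = new CollectorService();
            // OnStart() is protected in ServiceBase, so CollectorService has to make it reachable from here
            MyService.OnStart(null);
            // Block forever so the process doesn't exit right after startup
            System.Threading.Thread.Sleep(System.Threading.Timeout.Infinite);
        }
    }
    else
    {
        // No arguments: normal startup under the Service Control Manager
        System.ServiceProcess.ServiceBase[] ServicesToRun;
        ServicesToRun = new System.ServiceProcess.ServiceBase[] { new CollectorService() };
        System.ServiceProcess.ServiceBase.Run(ServicesToRun);
    }
}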

The “/d” part hasn’t been tested yet. That should allow me to debug the service as an application from within the Visual Studio IDE. As much as I dislike having to use an undocumented class, I’m not going to lose any sleep over it. Microsoft obsoleted documented functions going from Visual Studio 2003 to 2005, I’m not going to worry about one method.

[Edited on 6/8/06]

I updated the block of code for the "/d" part. I needed a timeout to keep the service running, otherwise it just runs through the startup and then exits. You can make it fancier, I just use that code for testing from within the IDE and I can break out of the service when I am done testing it.

[Edited on 7/20/06]

After a few go-arounds with Wise Technical Support, I sent them a sample installer project that easily duplicated this bug and they did confirm that it was a problem with their current product. There is also a similar problem where you can't install .NET 1.1 services under similar circumstances. Their fix for my problem will fix the .NET 1.1 service problem too. According to the email that I received, this is tentatively scheduled for the next release. That would probably be the version 7.0 release. In the meantime, I'll stick with my workaround.

[Edited on 1/27/08]

The MyService object in the above code is an instance of a System.ServiceProcess.ServiceBase descendant class that I created in my code. The descendant class opens up access to the protected OnStart() method. I had created a descendant of ServiceBase and had assumed that was the standard pattern. I should have been more clear about that part. This is one of the many reasons why I abandoned Wise for InstallAware.

Tuesday, May 30, 2006

Leaky Abstractions in Wise for Windows

If NOT Installed AND NOT ARG

..Do thing1

..Do thing2

..Do thing3

..Do thing4

..Do thing5

End If

At runtime, the first three actions inside the block were executed, but not the last two. This was a tricky one to figure out. Wise shows the actions as if they are running in a script, with an actual IF/END IF block. MSI technology is database driven. Instead of a script, you have each action as a row in a table, with the same IF condition defined for each action. It's similar in behavior to a script, but the condition is evaluated for each row. The thing3 action was changing the value of ARG, and when the condition was reevaluated for actions thing4 and thing5, the result of the IF condition had changed. This is another example of how Leaky Abstractions can bite you in the ass.

Programmers are used to seeing procedural code in a script. Wise does a pretty good job of abstracting out database rules to pseudo scripts, but it's not a perfect abstraction. Unless you run the installer through the debugger and examine the conditions at thing3 and then at thing4, you would never see what was going on.
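The difference between the two models fits in a few lines of Python. This is a toy simulation, not anything Wise or Windows Installer actually runs: the dictionary of properties, the action functions, and the two runner functions are all invented for the illustration. One runner checks the condition once, the way the pseudo script reads, and the other checks it again for every row, the way the MSI tables behave.

```python
def condition(props):
    """The shared condition: NOT Installed AND NOT ARG."""
    return not props["Installed"] and not props["ARG"]


def no_op(props):
    pass


def set_arg(props):
    # thing3 has a side effect: it changes the value of ARG
    props["ARG"] = True


ACTIONS = [
    ("thing1", no_op),
    ("thing2", no_op),
    ("thing3", set_arg),
    ("thing4", no_op),
    ("thing5", no_op),
]


def run_as_script(actions, props):
    """What the Wise view suggests: test the condition once, then run the block."""
    executed = []
    if condition(props):
        for name, action in actions:
            action(props)
            executed.append(name)
    return executed


def run_as_msi_rows(actions, props):
    """What MSI does: every row carries the condition and it is tested each time."""
    executed = []
    for name, action in actions:
        if condition(props):
            action(props)
            executed.append(name)
    return executed


script_result = run_as_script(ACTIONS, {"Installed": False, "ARG": False})
msi_result = run_as_msi_rows(ACTIONS, {"Installed": False, "ARG": False})
print(script_result)  # all five actions
print(msi_result)     # thing1, thing2, thing3 only
```

The per-row runner stops after thing3 because that action flips ARG, which matches what showed up at runtime.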

Friday, May 26, 2006

Samsung bans its own product

Samsung has a cell phone with an 8 GB hard drive (for playing music) and they have banned it from their own premises. They don't allow portable memory devices that can be used to smuggle out confidential information. Gizmodo has the article here. It's kind of funny, in an ironic sort of way.

Update: Techdirt has a better article here.

Visual Studio .NET 2005 Keyboard Shortcuts

Rants against the machine: Are stored procedures inherently evil?

I sort of agree with his viewpoint, but not completely. I think that using sprocs for most CRUD applications is a waste of time. Ad hoc SQL is usually sufficient for that task. But there are plenty of times where a sproc is pretty handy. Our applications are a mixture of Win32 Delphi and C# and work in the same database space. Having some of the business logic at the database level is a better reuse of shared code than duplicating code across development platforms.

But I do agree with Jeremy about the additional burdens that come with sprocs. You have to manage the versions. You have the additional burden of having multiple versions of the sproc if you support multiple database vendors (we do SQL Server and Sybase SQL Anywhere). Your programmers need to know more about SQL than "SELECT * FROM SomeTable".

His complaint about the sprocs being out of sync with the code was a non-starter for me. We version our database schema changes with our application code. If the database version isn't in sync with the application version, we force the user to update one or the other. I implemented a simple way to send out database changes with a point and click interface, each new version of our applications is bundled with the database update file that brings the database up to the current application version.
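The version check itself doesn't need much. As a rough sketch only (this is not our actual code, and the function names, the tuple version format, and the update file names are all invented for the example), the startup logic compares the schema version the application expects with the one recorded in the database, and picks out the bundled updates needed to bring the database up to date.

```python
def check_versions(app_version, db_version):
    """Decide which side has to be updated before the app is allowed to run."""
    if db_version < app_version:
        return "update database"
    if db_version > app_version:
        return "update application"
    return "in sync"


def pending_updates(updates, db_version, app_version):
    """Return, in version order, the update scripts newer than the database
    but not newer than the application.

    updates maps a schema version tuple to the name of its update file.
    """
    return [
        script
        for version, script in sorted(updates.items())
        if db_version < version <= app_version
    ]


# Hypothetical update files bundled with the application.
BUNDLED = {
    (5, 0, 1): "update_5_0_1.sql",
    (5, 0, 2): "update_5_0_2.sql",
    (5, 1, 0): "update_5_1_0.sql",
}

status = check_versions(app_version=(5, 1, 0), db_version=(5, 0, 1))
todo = pending_updates(BUNDLED, db_version=(5, 0, 1), app_version=(5, 1, 0))
print(status, todo)
```

Comparing the versions as tuples keeps the ordering numeric, so (5, 10, 0) sorts after (5, 9, 0), which a plain string comparison would get wrong.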

There are performance considerations as well. Once you get past the CRUD, you can get your money's worth out of SQL Server with well designed sprocs. I recently got a 10x improvement in one part of a service that I had written by replacing a hideously over-complicated ad hoc SQL statement with a sproc that produced the same results. That sproc split the SELECT statement into multiple statements that stored the individual results in table variables and then combined them into a single result that matched the output of the original SQL statement. Your mileage may vary.

I think we are seeing the swinging of the pendulum from everything must be in a sproc to "sprocs bad, code good". As with most things, I think there's some point in between that has your comfort zone. I'm quite content letting the database layer du jour (ADO/ADO.NET/Code generator) handle the CRUD tasks. When I feel the need for speed, I have no qualms against using "CREATE PROCEDURE".

Thursday, May 25, 2006

Best Buy prank

What happens when 80 people wearing blue polo shirts walk into a Best Buy?

Why it must be a new mission from Improv Everywhere.

Delphi 2006 quirks

In Delphi 2006, it doesn't work that way. The only way you can get access to the .dpr file is to select "View Source" from the "Project" menu. I wonder why they made that change in the behavior. I don't have to directly edit the .dpr files that often, but there are times where I do need to do so.

The other oddity is the lack of support for the SCC API for using your preferred source control. It's 2006, people; there's no reason why you can't pick your own SCC provider for use from within the Delphi IDE. I have no interest in the StarTeam source control bundled with Delphi. We dumped VSS for SourceGear's Vault and we have been very pleased with it. And I want to use it within Delphi like I can with Visual Studio. While there's no mysterious force preventing me from running the Vault IDE alongside the Delphi IDE, it's a concentration killer to leave the coding IDE just to check out the file I need to work on. On small projects where I am the sole owner, I'll just check all of the code out, but on the team projects, you just don't do that. VS spoiled me by prompting me to check a file out as soon as I started editing it.

I've been playing with the 30-day trial of EPocalipse's SourceConnXion 3 for the last few days. It provides source control integration for Delphi 2005/2006 and so far, so good. I've added it to the list of things for the boss to buy as part of our migration to Delphi 2006.