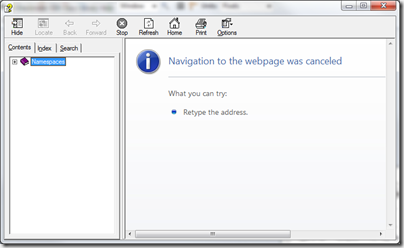

Since Windows supports .zip files directly, I used Windows Explorer (Windows 7) to copy the files from the .zip file to a new folder. I then opened the help file to look at some new functions the vendor had added for me. The help file loaded, but I couldn’t view any of its pages. It looked like this:

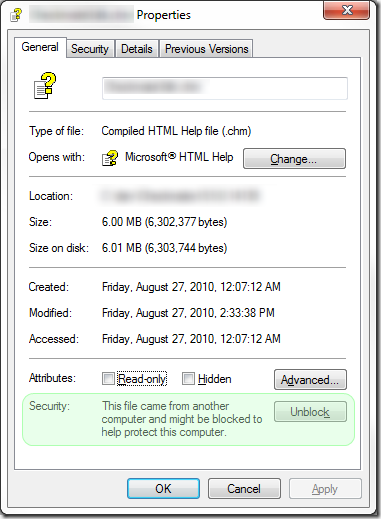

At first I thought the file was corrupt, but then I realized what was going on. In Windows Explorer, I right-clicked the .chm file and selected “Properties”.

If you look at the section that is highlighted in green, you’ll see the text “This file came from another computer and might be blocked to help protect this computer.” On Windows XP SP2 and later, a downloaded file’s security zone information is stored with the file as an alternate data stream named Zone.Identifier. A stream is a separate named resource attached to the file but kept apart from its main contents; alternate data streams are a feature of the NTFS file system. Because the .zip file had been downloaded with Internet Explorer, it carried this zone mark, and the .chm file extracted from it was treated as if it had been downloaded directly.
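Because the stream has a name, a program can open it with the `file:stream` path syntax. Here is a minimal Python sketch that reads it, assuming Windows and an NTFS volume; `zone_stream_path` and `read_zone_identifier` are hypothetical helper names, not part of any library:

```python
import os
from typing import Optional

# Name of the alternate data stream that holds the zone mark (assumption: NTFS volume).
ZONE_STREAM = "Zone.Identifier"


def zone_stream_path(path: str) -> str:
    """Build the "<file>:<stream>" path NTFS uses to address a named stream."""
    return f"{path}:{ZONE_STREAM}"


def read_zone_identifier(path: str) -> Optional[str]:
    """Return the text of the file's Zone.Identifier stream, or None if there is none.

    A missing stream (or a missing file) surfaces as FileNotFoundError,
    which is reported here as None.
    """
    try:
        with open(zone_stream_path(path), "r", encoding="utf-8", errors="replace") as f:
            return f.read()
    except FileNotFoundError:
        return None
```

For example, `read_zone_identifier(r"C:\dev\SomeSdk.chm")` would return the stream’s text while the help file is blocked, and `None` once the mark has been removed.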

This is actually a good thing. By default, Internet Explorer will not let you run content from your local disk without your explicit consent. Because the Internet Explorer rendering engine is used to render the pages of a .chm file, it blocks pages from files that came from the Internet zone.

You have a couple of ways of fixing this. One way would be to disable the blocking of local content. I don’t think that’s a safe way to operate, so I’m not going to describe how to do it. A better option is the “Unblock” button in the file’s Properties dialog: click it and the zone block is removed.

Another way is to use a command-line tool to remove the Zone.Identifier stream. Since NTFS streams are pretty much invisible to the casual eye, you can grab a free tool to strip that data out for you. Mark Russinovich’s Sysinternals collection of utilities includes a nice little gem called streams. It lists the streams associated with a file or folder and lets you delete them, recursively and with wildcards too. One of the things I like about the Sysinternals command-line tools is that you can run them without a parameter to get a brief description of what they do and how to use them:

```
Streams v1.56 - Enumerate alternate NTFS data streams
Copyright (C) 1999-2007 Mark Russinovich
Sysinternals - www.sysinternals.com

usage: \utils\SysInternals\streams.exe [-s] [-d]
-s     Recurse subdirectories
-d     Delete streams
```

When I ran streams on my .chm file, I saw the following:

```
streams.exe SomeSdk.chm

Streams v1.56 - Enumerate alternate NTFS data streams
Copyright (C) 1999-2007 Mark Russinovich
Sysinternals - www.sysinternals.com

C:\dev\SomeSdk.chm:
   :Zone.Identifier:$DATA       26
```
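The 26 at the end of that listing is the size of the stream in bytes. That figure is consistent with the stream holding just the two lines shown further down, each ending in a Windows CRLF. A quick check in Python, where the byte string is a reconstruction rather than a dump of the actual file:

```python
# Assumed stream contents: two lines, each terminated by CRLF (reconstruction, not a dump).
contents = b"[ZoneTransfer]\r\nZoneId=3\r\n"

# "[ZoneTransfer]" is 14 bytes and "ZoneId=3" is 8, plus 2 bytes of CRLF per line.
print(len(contents))  # prints 26
```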

You can also list a file’s alternate data streams with the DIR command’s “/R” parameter. To see the contents of a stream, you can open it in Notepad with syntax like this:

```
notepad SomeSdk.chm:Zone.Identifier
```

That would display something like this:

```
[ZoneTransfer]
ZoneId=3
```
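The stream is plain INI-style text, so Python’s standard `configparser` module can read it. This sketch extracts `ZoneId` and labels it with the standard Windows URL security zone numbers; `parse_zone_id`, `is_remote`, and `ZONE_NAMES` are illustrative names, and `is_remote` simply treats a ZoneId of 3 (Internet) or higher as remote:

```python
import configparser
from typing import Optional

# Standard Windows URL security zone numbers (the URLZONE_* values).
ZONE_NAMES = {
    0: "Local Machine",
    1: "Local Intranet",
    2: "Trusted Sites",
    3: "Internet",
    4: "Restricted Sites",
}


def parse_zone_id(text: str) -> Optional[int]:
    """Return ZoneId from Zone.Identifier text, or None if it isn't present."""
    # Interpolation is off so '%' characters in any URL fields are read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_option("ZoneTransfer", "ZoneId"):
        return None
    return parser.getint("ZoneTransfer", "ZoneId")


def is_remote(zone_id: int) -> bool:
    """Treat the Internet zone (3) and anything higher as remote."""
    return zone_id >= 3


zone = parse_zone_id("[ZoneTransfer]\r\nZoneId=3\r\n")
print(zone, ZONE_NAMES.get(zone, "Unknown"), is_remote(zone))  # prints 3 Internet True
```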

Any ZoneId of 3 (the Internet zone) or higher means the file is treated as remote. So I ran streams one more time, this time with the -d parameter, and got this:

```
streams.exe -d SomeSdk.chm

Streams v1.56 - Enumerate alternate NTFS data streams
Copyright (C) 1999-2007 Mark Russinovich
Sysinternals - www.sysinternals.com

C:\dev\SomeSdk.chm:
   Deleted :Zone.Identifier:$DATA
```

Once I did that, my help file was unblocked and ready to be used.
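If you’d rather script the cleanup, deleting the Zone.Identifier stream by its `file:stream` path has the same effect on that one stream. This is a minimal Python sketch, assuming Windows and an NTFS volume, where `os.remove` on a stream path deletes just that stream and leaves the file’s contents alone. `remove_zone_identifier` is a hypothetical name, and unlike `streams -d` it removes only Zone.Identifier rather than every alternate stream, with no equivalent of the `-s` recursion:

```python
import os

# Name of the alternate data stream that holds the zone mark (assumption: NTFS volume).
ZONE_STREAM = "Zone.Identifier"


def remove_zone_identifier(path: str) -> bool:
    """Delete the file's Zone.Identifier stream; return False if it had none.

    On NTFS, removing "<file>:Zone.Identifier" deletes only that alternate
    data stream; the file's main content stays in place.
    """
    try:
        os.remove(f"{path}:{ZONE_STREAM}")
    except FileNotFoundError:
        return False
    return True
```

For example, `remove_zone_identifier(r"C:\dev\SomeSdk.chm")` would return `True` the first time and `False` on any later call, since the stream is already gone.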